Navigating Model Versioning: Tools and Tips for Applied Data Scientists

Why Versioning and What is it?

In the fast-paced world of data science, where experimentation is key and models evolve rapidly, maintaining version control is paramount. Without proper versioning, tracking changes, reproducing results, and collaborating effectively can quickly become daunting tasks.

In this comprehensive guide, we’ll delve into the importance of model versioning and introduce you to a suite of tools and tips to streamline your workflow. These tools have been invaluable in previous projects, such as:

- Replacing GPT with NER and Open Source: During this project, we utilized Git & Docker for version control to track changes and collaborate effectively with team members.

- Using T5 to Balance Textual Dataset: We leveraged Conda to ensure consistent enviornments in different team member’s machines.

- Hyperparameters and Fine-Tuning: Weights & Biases played pivotal role in registering metadata and keeping track of different hyperparameters experiments.

From Conda for consistent environment management to DVC for data versioning, we’ll explore each tool’s role in the model development lifecycle. Whether you’re a seasoned data scientist or a newcomer to the field, this blog post will equip you with the knowledge and tools you need to master model versioning and elevate your data science projects to new heights.

Conda: Your Consistent Environment Companion

In the ever-evolving landscape of data science, maintaining consistent environments across different machines and team members is crucial. Conda comes to the rescue by providing a powerful environment and package management system. With Conda, you can create isolated environments for your projects, ensuring that dependencies are consistent and reproducible. Whether you’re working on a solo project or collaborating with a team, Conda simplifies environment setup and management, allowing you to focus on what matters—building and refining your models.

Docker: Reproducibility Made Easy

In the realm of data science, ensuring reproducibility across different platforms and environments is paramount. Docker emerges as a game-changer by offering a seamless solution for packaging your entire environment, dependencies, and code into a container. With Docker, you can eliminate compatibility issues and ensure that your models run smoothly regardless of the underlying infrastructure.

One remarkable feature of Docker is its integration with popular development tools like Visual Studio Code (VSCode). By attaching VSCode to a Docker container, you can develop directly within the container environment, maintaining consistency throughout your workflow. This capability not only enhances reproducibility but also streamlines the development process, allowing for a more efficient and collaborative coding experience.

Git: Version Control Nirvana

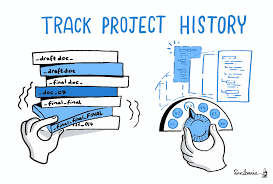

Enter Git, the cornerstone of version control systems (VCS) in the software development world. Git empowers data scientists and developers alike to track changes to their codebase, collaborate seamlessly with teammates, and maintain a reliable history of their projects.

With Git, you can create branches to work on new features or experiments without fear of disrupting the main codebase. If something goes awry, Git’s branching and merging capabilities make it easy to roll back to a previous state or incorporate changes from multiple contributors. Plus, platforms like GitHub and GitLab provide robust collaboration features, enabling teams to review, comment, and iterate on code with ease.

Whether you’re working on a solo project or part of a larger team, Git ensures that your code is organized, accessible, and versioned effectively. Say goodbye to the days of lost changes and tangled codebases—Git brings order and clarity to your development workflow, empowering you to focus on what you do best: building exceptional models and algorithms.

Version Control at it's best

Tracking Model Experimentation and Deployment: Weights and Biases vs. MLflow

In the realm of model experimentation and deployment, having the right tools to track experiments, metadata, and deployments is essential. Two popular platforms that excel in this regard are Weights and Biases (W&B) and MLflow.

Weights and Biases

Weights and Biases (W&B) provides a comprehensive suite of tools for tracking experiments, visualizing results, and managing metadata. With W&B, data scientists can log hyperparameters, metrics, and output visualizations, enabling them to gain deep insights into their model’s performance and behavior. Additionally, W&B offers seamless integration with popular machine learning frameworks like TensorFlow and PyTorch, making it easy to incorporate into existing workflows.

One of the standout features of W&B is its simplicity and ease of use. Setting up experiments and logging data is straightforward, allowing data scientists to focus on their models rather than wrangling with complex tracking systems. Moreover, W&B provides collaborative features, enabling teams to share and discuss results in real-time, fostering a culture of transparency and knowledge sharing.

MLflow

MLflow, on the other hand, offers a comprehensive platform for managing the end-to-end machine learning lifecycle. From experiment tracking to model packaging and deployment, MLflow streamlines the entire process, ensuring reproducibility and scalability at every step.

One of the key strengths of MLflow is its flexibility and extensibility. With support for multiple programming languages and frameworks, data scientists can leverage MLflow across a wide range of projects and environments. Additionally, MLflow’s model registry facilitates model deployment and management, allowing teams to easily transition models from experimentation to production.

When comparing W&B and MLflow, both platforms offer robust solutions for tracking model experimentation, metadata, and deployment. The choice between the two ultimately comes down to specific use cases, preferences, and existing workflows. Whether you opt for the simplicity of Weights and Biases or the comprehensive capabilities of MLflow, both platforms empower data scientists to track, manage, and deploy models with confidence and efficiency.

MLOps Flow Scheme

DVC: Versioning Data like a Pro

In the world of machine learning, versioning not only applies to code but also to data. Managing different versions of datasets, tracking changes, and ensuring reproducibility are critical tasks that data scientists face daily. This is where Data Version Control (DVC) shines.

DVC is a powerful tool specifically designed for versioning data and managing machine learning workflows. It works seamlessly with Git, allowing data scientists to version datasets alongside their code. With DVC, you can track changes to data files, collaborate with team members, and reproduce experiments with ease.

One of the key features of DVC is its ability to handle large datasets efficiently. Instead of storing multiple copies of data files, DVC uses lightweight metafiles to track changes, significantly reducing storage overhead. This makes it ideal for projects with massive datasets or frequent updates.

Moreover, DVC integrates seamlessly with existing data science workflows. Whether you’re using Jupyter Notebooks, Python scripts, or deep learning frameworks like TensorFlow or PyTorch, DVC can be easily integrated into your workflow, providing versioning capabilities without disrupting your existing tools and processes.

In conclusion, DVC is a valuable addition to any data scientist’s toolkit, offering robust versioning capabilities for data and seamless integration with existing workflows. By using DVC, data scientists can ensure the reproducibility and reliability of their experiments, making it easier to collaborate with team members and maintain the integrity of their machine learning projects.

Conclusion

In the dynamic world of data science, mastering model versioning is essential for success. By leveraging tools like Conda, Docker, Git, Weights and Biases, MLflow, and DVC, data scientists can streamline their workflows, ensure reproducibility, and collaborate effectively with team members. Whether you’re tracking code changes, experimenting with new models, or versioning data, these tools provide the foundation for a robust and efficient development process. By incorporating these tools into your workflow, you can elevate your data science projects to new heights and drive meaningful insights from your data.

If you have any questions, feedback, or would like to learn more about how these tools can enhance your data science projects, don’t hesitate to reach out to me. I’m here to help you navigate the complexities of model versioning and empower you to succeed in your data science journey. Feel free connect with me on LinkedIn Dvir Lafer. I look forward to hearing from you and supporting your data science endeavors!

Happy modeling!